In a world dominated by the buzz around powerful GPUs for AI tasks—especially image generation—it’s easy to feel left out if you don’t have the latest and greatest graphics card. Let’s face it, GPU prices can be eye-watering, making cutting-edge AI accessible only to a select few.

But what if I told you there’s a way to tap into the latent potential of your trusty CPU for generating stunning images?

It's true that GPUs are the reigning champions of parallel processing, which makes them ideal for the computationally intensive nature of diffusion models, but your CPU still packs a punch! This post explores the challenges of running image generation on a CPU and how techniques like Latent Consistency Models (LCM) and optimization toolkits like OpenVINO can help us overcome them.

Why Even Try CPU Image Generation?

We’re at a point where even mid-tier GPUs are priced like high-end luxury items. If you’re a hobbyist, student, or just someone curious about AI art, it might not make sense to splurge on hardware right away. So, if you’re stuck with just a CPU, why not see what it can do?

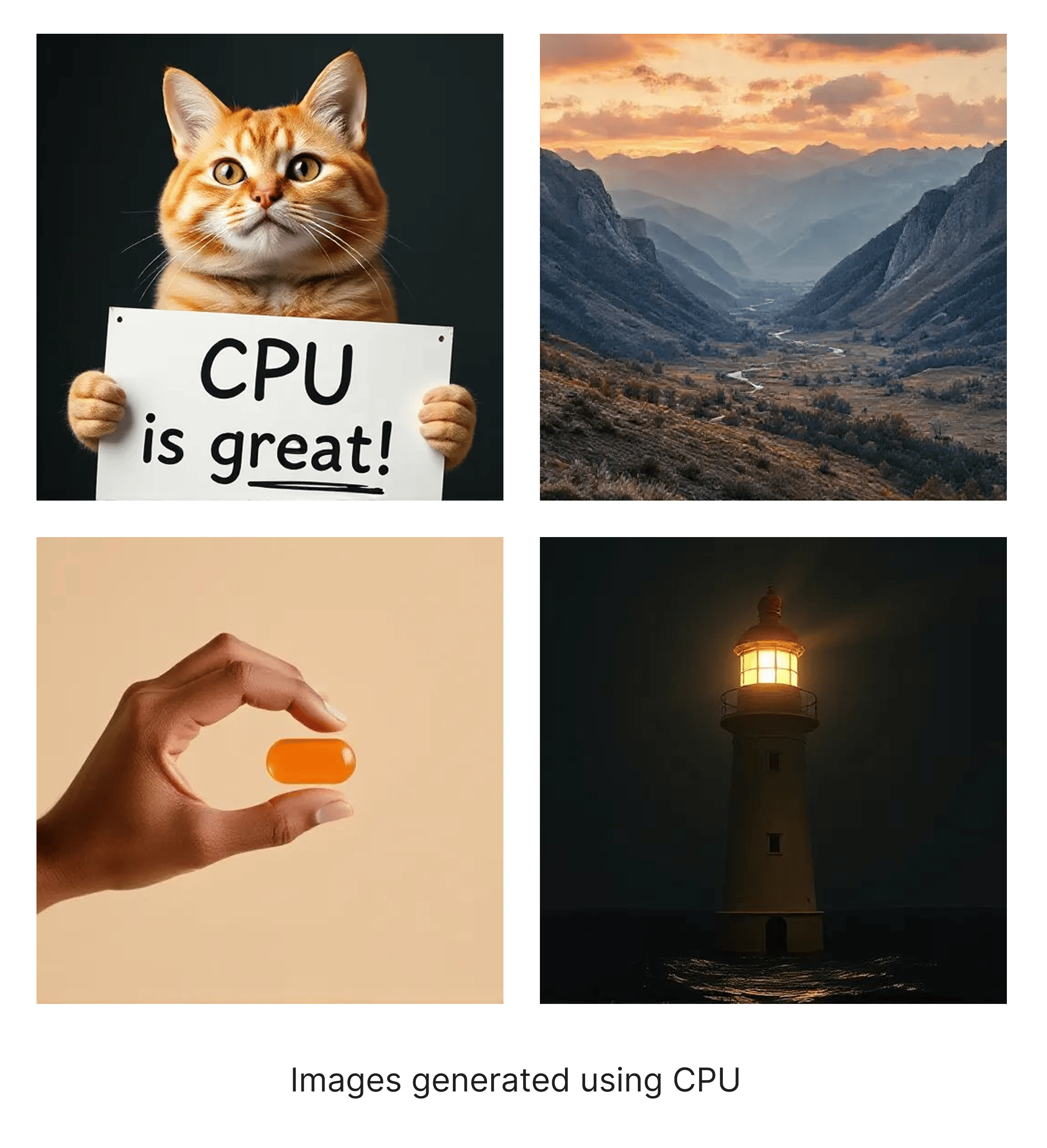

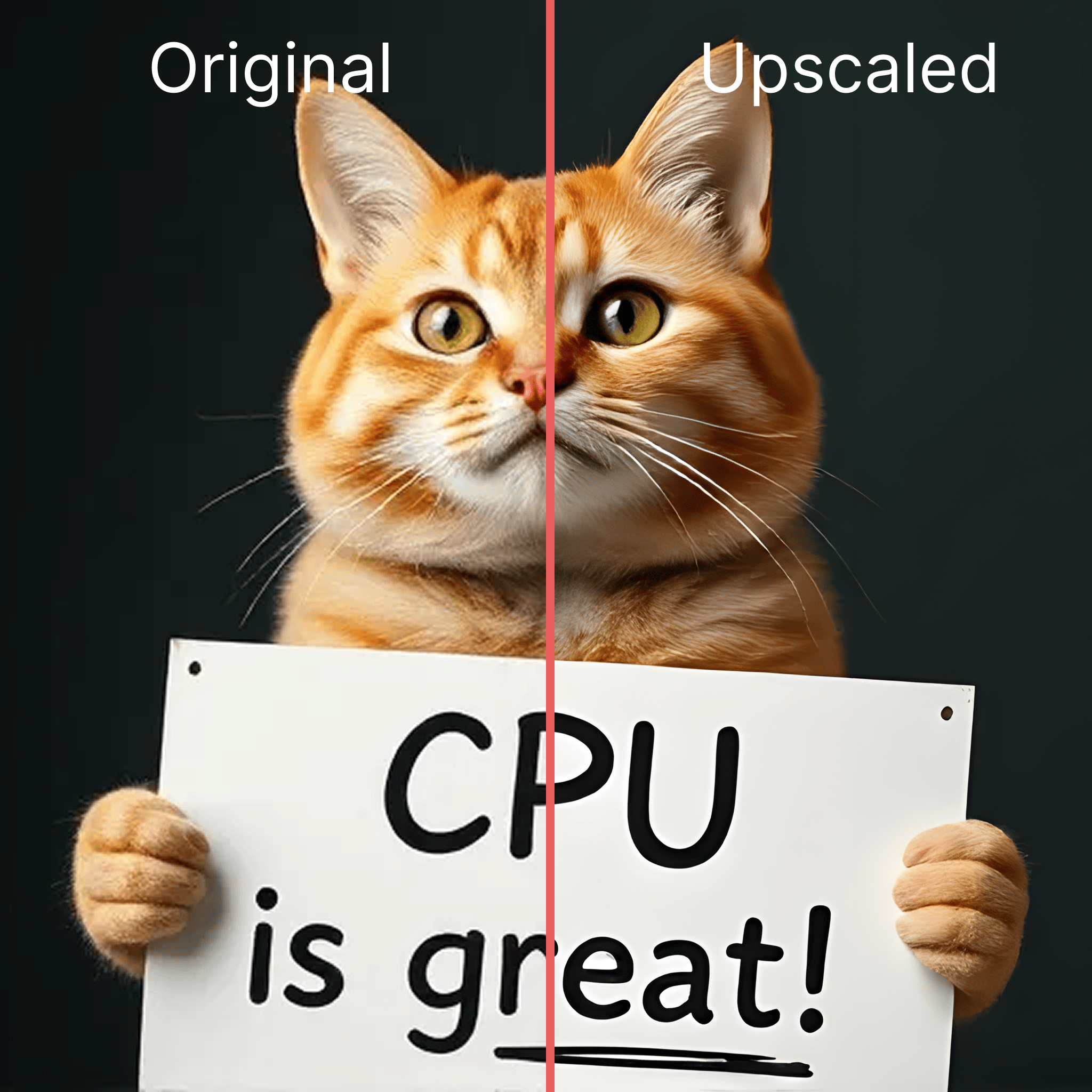

Spoiler alert: it's better than you think. Just look at the samples above.

The Challenges of CPU Image Generation

Running diffusion models like Stable Diffusion on CPU presents some real bottlenecks:

- Limited parallelism: A CPU has far fewer cores than a GPU, so it can't work through the massive matrix multiplications nearly as quickly.

- Memory bandwidth: Image generation requires moving large tensors around, and CPU memory bandwidth is typically well below that of GPU memory.

- Precision trade-offs: CPU inference often relies on quantized models (int8 or even int4) to reach usable speeds, which can cost some image quality.

What this means practically is: generating a single 512x512 image can take several minutes, and anything larger is a slog.

Overcoming Challenges

Fortunately, the AI research community is constantly innovating, and two key advancements are making CPU-based image generation more feasible:

- Latent Consistency Models (LCM): LCMs are a distilled version of traditional diffusion models. They are trained to directly predict the final output in significantly fewer steps (often just 4-8 steps compared to 20-50+ in standard models). This drastically reduces the computational cost and, consequently, the generation time. While there might be a slight trade-off in terms of ultimate image quality or fine-grained control, the speed gains are substantial.

- OpenVINO: Developed by Intel, OpenVINO is an open-source toolkit designed to optimize and deploy AI inference across various Intel hardware, including CPUs. It provides tools for model conversion, quantization (reducing the precision of numerical representations, like from float32 to int8 or even int4), and efficient execution. By optimizing the diffusion model for your specific CPU architecture, OpenVINO can significantly accelerate the generation process.
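To make those two ideas a bit more concrete, here's a back-of-the-envelope sketch in plain Python. It is not how LCM or OpenVINO work internally: `step_speedup`, `quantize_int8`, and `dequantize` are hypothetical helper names, the weights are made up, and the one-billion parameter count is just a round number for illustration. It assumes every denoising step costs roughly the same, and it uses simple symmetric int8 quantization as a stand-in for the real thing.

```python
def step_speedup(standard_steps: int, lcm_steps: int) -> float:
    """Rough speedup, assuming every denoising step costs about the same."""
    return standard_steps / lcm_steps


def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: map floats onto integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    return [round(v / scale) for v in values], scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


# Bytes needed to store one weight at each precision.
BYTES_PER_WEIGHT = {"float32": 4.0, "int8": 1.0, "int4": 0.5}

# 25 standard steps versus 4 LCM steps (both within the ranges quoted above).
print(f"speedup: {step_speedup(25, 4):.2f}x")

weights = [0.82, -1.27, 0.05, 0.4]  # made-up example weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
worst_error = max(abs(a - b) for a, b in zip(weights, restored))
print("quantized:", quantized)
print("worst rounding error:", worst_error)

N_PARAMS = 1_000_000_000  # round number for illustration, not a real model
for dtype, n_bytes in BYTES_PER_WEIGHT.items():
    print(f"{dtype}: {N_PARAMS * n_bytes / 1e9:.1f} GB of weights")
```

The takeaway: fewer steps cut generation time roughly in proportion, and lower precision shrinks the weights at the cost of a small rounding error per value.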

The CPU’s Moment to Shine

Inspired by the need to democratize AI image generation and leverage existing hardware, I started experimenting with optimized diffusion models on CPU. That’s when I found the excellent fastsdcpu repo by @rupeshs—an efficient implementation for running Stable Diffusion on CPUs.

To make it even easier to use, I created a Docker image based on that repo. It lets you spin up an image generation environment in an isolated, reproducible way, without worrying about a complex local setup.

Getting Started

Use the following compose.yml file:

    services:
      fastsd:
        image: focusbreathing/fastsd-cpu:1.0.0-beta
        restart: unless-stopped
        build:
          context: .
        ports:
          - 7860:7860
        volumes:
          - ./cache:/root/.cache/huggingface
          - ./results:/usr/src/app/results

Start the container. Note that the image is about 10 GB, so be patient while it is being pulled:

    docker compose up -d

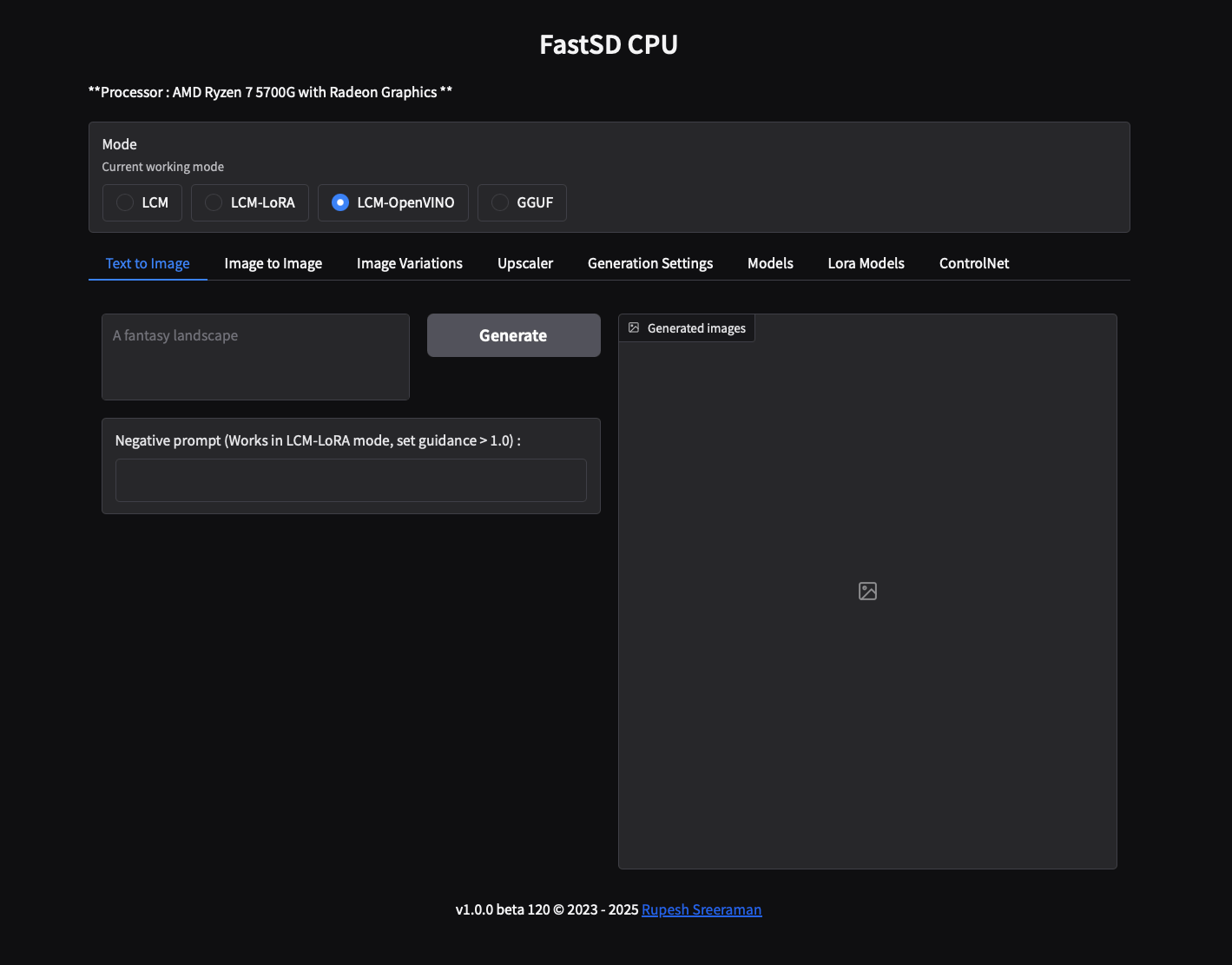

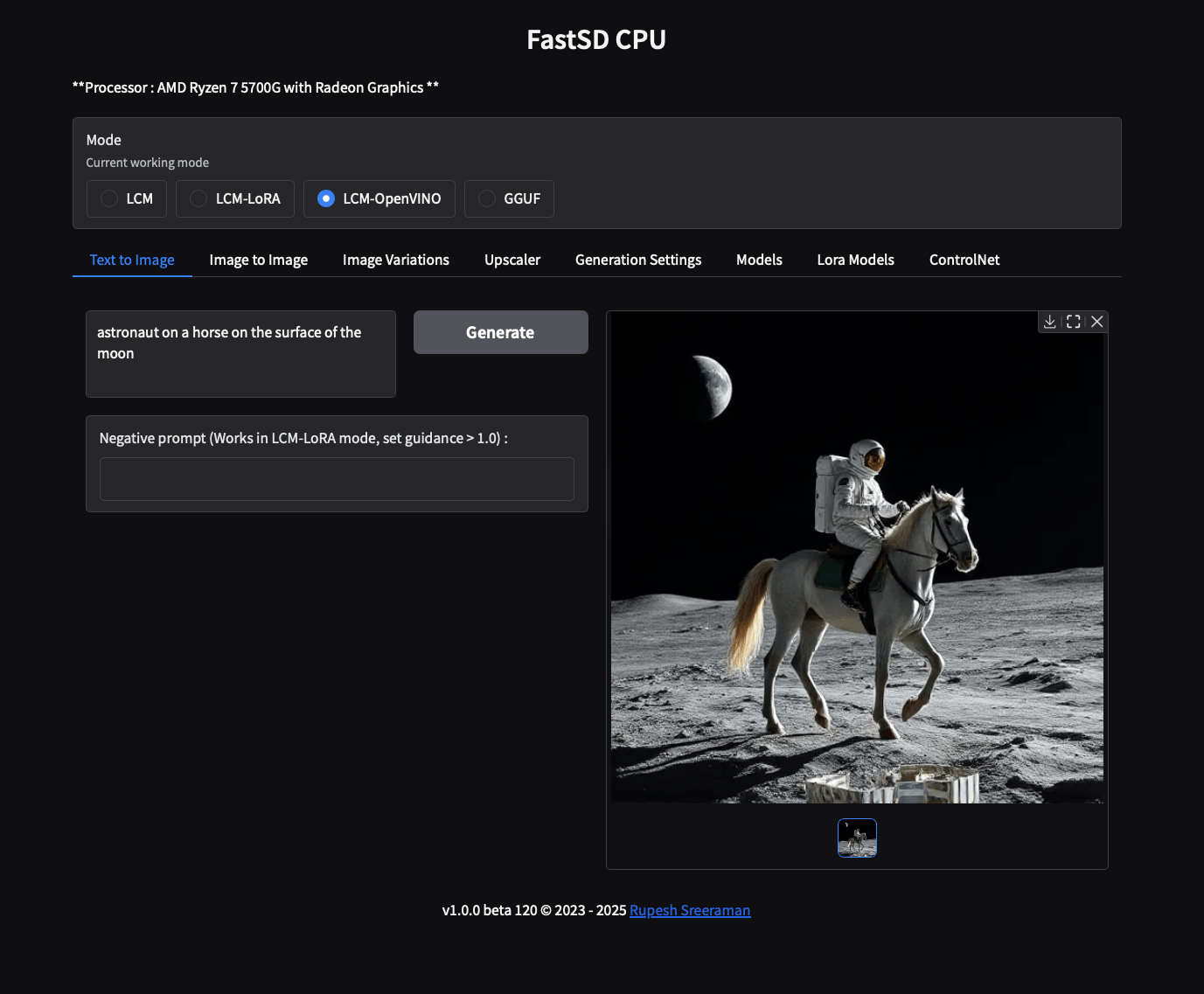

Once the container is running, visit http://your-server-ip:7860 in your browser.
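If you'd rather not keep refreshing the browser while the container starts, a small polling script can tell you when the web UI is reachable. This is only a sketch using the Python standard library: `wait_for_ui` is a hypothetical helper name, the timeout and interval defaults are arbitrary, and it assumes the UI answers a plain GET on its root URL with HTTP 200.

```python
import http.client
import time
import urllib.request


def wait_for_ui(url: str, timeout_s: float = 300.0, interval_s: float = 5.0) -> bool:
    """Poll `url` until it answers with HTTP 200 or `timeout_s` has elapsed."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(url, timeout=10) as response:
                if response.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # Connection refused, timeout, or an HTTP error status:
            # the container is probably still starting, so try again.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

For example, `wait_for_ui("http://your-server-ip:7860")` returns `True` as soon as the page loads, or `False` if nothing answers within five minutes.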

For the best image quality, use LCM-OpenVINO; for the best performance, start with LCM-LoRA. Regardless of which model you choose, the first time you submit a prompt you may have to wait a couple of minutes (depending on your internet speed) while the model is downloaded. This happens only the first time you run a given model. Be patient and prepare to be amazed.
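The compose file mounts `./cache` as the container's Hugging Face cache, so one way to see whether that first download is making progress is to watch the folder grow on the host. Here's a minimal sketch, assuming you run it from the directory that holds compose.yml; `dir_size_bytes` is a hypothetical helper name. Symlinks are skipped so a file that is linked from more than one place is not counted twice.

```python
import os


def dir_size_bytes(path: str) -> int:
    """Total size of regular files under `path`; 0 if the folder does not exist."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(path):
        for name in filenames:
            full_path = os.path.join(dirpath, name)
            if os.path.islink(full_path):
                continue  # skip symlinks to avoid counting a file twice
            try:
                total += os.path.getsize(full_path)
            except OSError:
                pass  # file vanished or is unreadable; ignore it
    return total


# ./cache is the host side of the volume mapping in compose.yml.
print(f"cache size: {dir_size_bytes('./cache') / 1e9:.2f} GB")
```

Run it a few times while the first prompt is processing; once the number stops growing, the download is most likely done.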

Bonus: Upscale

Images generated on a CPU might be slightly noisy and lower in resolution, but AI can help with that too. There are many free upscaling tools; my personal favorite is Upscayl, an open-source AI image upscaler.

Results

Hardware

- 12-vCPU Proxmox VM (Ryzen 7 5700G host)

- 80 GB RAM

(Refer to the fastsdcpu repo for the system requirements)

With this setup, I was able to consistently generate 512x512 images in under 200 seconds each. That's a little over 3 minutes per image, which is not bad considering no GPU was involved.

After upscaling, the images are perfectly usable—even for publishing online.
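To put the 200-second figure in perspective, here's the arithmetic as a tiny Python sketch. `summarize` is a hypothetical helper name, and since 200 seconds is an upper bound from my runs, the images-per-hour number is a floor rather than a benchmark.

```python
def summarize(seconds_per_image: float) -> str:
    """Convert a per-image time into minutes/seconds and an hourly rate."""
    minutes, seconds = divmod(int(seconds_per_image), 60)
    per_hour = 3600 / seconds_per_image
    return f"{minutes} min {seconds} s per image, about {per_hour:.0f} images per hour"


print(summarize(200))  # 3 min 20 s per image, about 18 images per hour
```

That's enough for an evening of experimenting with prompts, even if it won't compete with a GPU on throughput.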

Conclusion: Use What You Have

While GPUs remain the gold standard for high-performance image generation, don't underestimate the capabilities of your CPU. If you're constrained by budget or hardware, don't let that stop you from exploring AI art.

So, if you’re looking to dip your toes into the world of AI image generation without breaking the bank, give it a try. You might be surprised by what your humble CPU is capable of!